Stress-Testing AI Vision Systems: Rethinking How Adversarial Images Are Generated

January 23, 2026

Researchers develop a new frequency-aware approach to crafting adversarial noise that better matches human visual perception

Deep neural networks (DNNs) have become a cornerstone of modern AI technology, driving a thriving field of research in image-related tasks. These systems have found applications in medical diagnosis, automated data processing, computer vision, and various forms of industrial automation, to name a few. As our reliance on AI models grows, so does our need to test them thoroughly using adversarial examples. Simply put, adversarial examples are images that have been strategically modified with noise to trick an AI into making a mistake. Understanding adversarial image generation techniques is essential for identifying vulnerabilities in DNNs and for developing more secure, reliable systems.

Despite their importance, current techniques for creating adversarial examples have significant limitations. Scientists have mainly focused on keeping the added noise mathematically small by bounding its Lp norm, a measure of the overall size of the pixel changes. While this keeps the changes subtle, it often produces grainy artifacts that look unnatural because they do not match the textures of the original image. As a result, even noise that is small and hard to see can be detected and blocked by security pre-filters that look for unusual frequency patterns. A key challenge in this field is therefore to move beyond simply minimizing the amount of noise and to craft perturbations whose frequency content resembles that of the image itself.
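To make the Lp-norm idea concrete, here is a minimal sketch of a single FGSM-style step under an L∞ budget (the p = ∞ case), with an L2 projection shown for comparison. It assumes grayscale images scaled to [0, 1] and uses a random array as a stand-in for the loss gradient that a real attack would obtain from a model; `fgsm_step`, `project_linf`, and `project_l2` are hypothetical names chosen for illustration, not part of IFAP.

```python
import numpy as np

# Hypothetical illustrative helpers (not from the IFAP paper).
def project_linf(delta, eps):
    """L-infinity ball: no single pixel may change by more than eps."""
    return np.clip(delta, -eps, eps)

def project_l2(delta, eps):
    """L2 ball: rescale so the Euclidean norm of the whole change is at most eps."""
    norm = np.linalg.norm(delta)
    return delta if norm <= eps else delta * (eps / norm)

def fgsm_step(image, grad, eps):
    """One FGSM-style step: push every pixel by eps along the sign of the loss
    gradient, project onto the L-infinity ball (a no-op for a single step, but
    needed when steps are repeated), and keep pixels in [0, 1]."""
    delta = project_linf(eps * np.sign(grad), eps)
    return np.clip(image + delta, 0.0, 1.0)

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(32, 32))
grad = rng.normal(size=image.shape)  # stand-in for dLoss/dImage from a real model
adv = fgsm_step(image, grad, eps=8 / 255)
print(np.abs(adv - image).max())  # never exceeds 8/255
```

The budget only bounds how large the change is; it says nothing about which spatial frequencies the change occupies, so a sign-of-gradient perturbation can satisfy a tight budget and still look like uniform grain.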

Against this backdrop, doctoral student Masatomo Yoshida and Professor Masahiro Okuda from the Graduate School of Science and Engineering, Doshisha University, Japan, have developed a method to align additive noise in adversarial examples with the “spectral shape” of the image. Their study, published in Volume 13 of the journal IEEE Access on December 24, 2025, introduces an innovative framework called Input-Frequency Adaptive Adversarial Perturbation (IFAP).

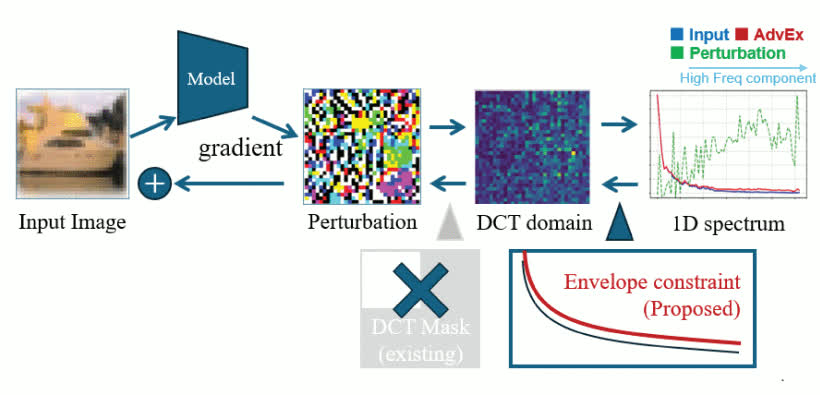

Unlike previous frequency-aware methods that only manipulated specific frequency bands, IFAP uses a new spectral envelope constraint derived from the input image's own spectrum. This allows the added noise to adapt to the entire frequency distribution of the input image, so that the perturbation stays spectrally faithful to the original content.

The researchers tested IFAP on diverse datasets, including images of house numbers, general objects, and complex textures such as terrain and fabrics. To assess its performance, they used a comprehensive set of metrics, including a new one they developed called Frequency Cosine Similarity (Freq_Cossim). Whereas standard metrics usually measure pixel-level errors, Freq_Cossim measures how closely the shape of the noise's frequency spectrum matches that of the original image.
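The article does not give a formula for Freq_Cossim, so the sketch below is only one plausible reading of the description: take the 2-D discrete cosine transform (DCT) of the image and of the perturbation, compare their magnitude spectra, and report the cosine similarity. It uses NumPy only (the orthonormal DCT-II is built as a matrix), assumes single-channel images, and the name `freq_cossim` is hypothetical; the paper's definition may differ, for example by using log magnitudes, per-channel spectra, or radially averaged profiles.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis: row k holds the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)  # DC row gets the 1/sqrt(n) normalization
    return c

def dct2(x):
    """Separable 2-D DCT-II of a single-channel image (columns, then rows)."""
    return dct_matrix(x.shape[0]) @ x @ dct_matrix(x.shape[1]).T

def freq_cossim(image, noise, eps=1e-12):
    """Hypothetical Freq_Cossim: cosine similarity between the DCT magnitude
    spectra of the image and the perturbation (1.0 = identical spectral shape)."""
    a = np.abs(dct2(image)).ravel()
    b = np.abs(dct2(noise)).ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))

# Usage on a smooth synthetic 32x32 image (not data from the paper)
yy, xx = np.mgrid[0:32, 0:32] / 32.0
image = 0.5 + 0.2 * np.sin(2 * np.pi * xx) * np.cos(2 * np.pi * yy)
white = np.random.default_rng(0).normal(scale=0.03, size=image.shape)
print(freq_cossim(image, 0.01 * image))  # same spectral shape, close to 1
print(freq_cossim(image, white))         # flat spectrum, much lower
```

A scaled copy of the image scores essentially 1.0 because only the shape of the spectrum matters, not its overall level, whereas white noise, whose spectrum is flat, scores far lower against a smooth image whose energy sits at low frequencies.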

The results showed that IFAP significantly outperformed existing adversarial generation techniques in structural and textural similarity to the source material. Despite being more visually natural and subtle, the adversarial attack remained highly effective, successfully fooling a wide range of AI architectures. Interestingly, the researchers also demonstrated that these harmonized perturbations are more resilient to common image-cleaning techniques, such as JPEG compression or blurring. Because the noise is so well-integrated into the natural textures of the image, it is much harder for simple transformations to eliminate it without significantly altering the image itself.
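The intuition behind the robustness result, that simple cleaning filters remove some noise patterns far more easily than others, can be probed with a toy measurement. The sketch below assumes a 3×3 box blur as the cleaning step and compares two synthetic perturbations, pixel-wise random ±1 grain and a smooth low-frequency pattern; neither is an IFAP output, and `box_blur3` and `surviving_energy` are hypothetical helpers. JPEG compression is nonlinear, so the shortcut used here (filtering the perturbation on its own) would not apply to it.

```python
import numpy as np

def box_blur3(x):
    """Hypothetical 'cleaning' defense: 3x3 mean filter with edge replication."""
    p = np.pad(x, 1, mode="edge")
    h, w = x.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def surviving_energy(delta, defense):
    """Fraction of perturbation energy left after a LINEAR defense.
    Linearity means defense(x + delta) - defense(x) == defense(delta)."""
    return float(np.sum(defense(delta) ** 2) / np.sum(delta ** 2))

rng = np.random.default_rng(0)
grainy = rng.choice([-1.0, 1.0], size=(64, 64))  # white, pixel-level grain
yy, xx = np.mgrid[0:64, 0:64]
smooth = np.sin(2 * np.pi * xx / 32) * np.cos(2 * np.pi * yy / 32)  # low-frequency pattern
print(surviving_energy(grainy, box_blur3))  # most of the grain is averaged away
print(surviving_energy(smooth, box_blur3))  # most of the smooth pattern survives
```

This only illustrates the general low-pass behavior of blurring; it does not reproduce the paper's robustness experiments.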

IFAP has important implications for how adversarial examples are used in AI research. By understanding how to create noise that is consistent with human perception, researchers can implement better adversarial attacks to stress-test and retrain AI models to be more robust. “We believe our work could lead to the development of highly reliable AI models for fields such as medical diagnosis, which will not be confused by slight changes in image quality or noise,” says Prof. Okuda.

Looking ahead, this study sets a new benchmark for how we evaluate AI safety and performance in image-centered tasks. “Evaluation criteria that emphasize consistency with human perception and frequency characteristics, as our research proposes, may become more common in the next 5 to 10 years,” concludes Prof. Okuda. “This shift will likely raise the reliability of AI systems that support important infrastructures of society, such as medical care and transportation.”

Overview of the Proposed Framework

IFAP generates adversarial perturbations using model gradients and then shapes them in the discrete cosine transform (DCT) domain. Unlike existing frequency-aware methods that apply a fixed frequency mask, IFAP introduces an input-adaptive spectral envelope constraint derived from the input image’s spectrum. This constraint guides the perturbation’s full-spectrum profile to conform to the input image, which improves the spectral fidelity of the generated adversarial example while maintaining its attack effectiveness.
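The caption's pipeline (a gradient step, then shaping in the DCT domain) can be illustrated with a deliberately simplified sketch. Here the envelope constraint is interpreted as follows: keep the sign of each DCT coefficient of a raw gradient-sign perturbation, replace its magnitude with the input image's normalized DCT magnitude, and rescale the result to an L∞ budget. This is an assumption for illustration, not the authors' formulation, which the article does not spell out; `shape_to_envelope` and `envelope_step` are hypothetical names, and a random array stands in for a real model gradient.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis matrix (its transpose is the inverse DCT)."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)
    return c

def dct2(x):
    return dct_matrix(x.shape[0]) @ x @ dct_matrix(x.shape[1]).T

def idct2(coeffs):
    # Orthonormal basis, so the inverse is just the transpose on each side
    return dct_matrix(coeffs.shape[0]).T @ coeffs @ dct_matrix(coeffs.shape[1])

def shape_to_envelope(raw, image, eps):
    """Hypothetical envelope shaping: keep the raw perturbation's DCT signs,
    impose the image's normalized DCT magnitude, rescale to max |delta| = eps."""
    signs = np.where(dct2(raw) >= 0, 1.0, -1.0)  # never 0, so no coefficient is dropped
    envelope = np.abs(dct2(image))
    envelope /= envelope.max()
    delta = idct2(signs * envelope)
    return delta * (eps / np.abs(delta).max())

def envelope_step(image, grad, eps=8 / 255):
    """One gradient-sign step followed by spectral shaping; returns (adv, delta)."""
    delta = shape_to_envelope(np.sign(grad), image, eps)
    return np.clip(image + delta, 0.0, 1.0), delta

# Usage with a smooth synthetic image and a stand-in (random) gradient
yy, xx = np.mgrid[0:32, 0:32] / 32.0
image = 0.5 + 0.2 * np.sin(2 * np.pi * xx) * np.cos(2 * np.pi * yy) + 0.1 * xx
grad = np.random.default_rng(1).normal(size=image.shape)
adv, delta = envelope_step(image, grad)
```

In this toy version the perturbation's magnitude spectrum has exactly the image's shape by construction, so a Freq_Cossim-style score would be 1, and because natural images concentrate energy at low frequencies the shaped noise is dominated by smooth components. How much attack strength survives such shaping against a real model is an empirical question the sketch does not answer.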

Image Credits: Professor Masahiro Okuda from Doshisha University, Japan

Image link: https://ieeexplore.ieee.org/document/11314522

Image license: CC-BY 4.0

Usage restrictions: Credit must be given to the creator.

Reference

| Item | Details |
|---|---|
| Title of original paper | IFAP: Input-Frequency Adaptive Adversarial Perturbation via Full-Spectrum Envelope Constraint for Spectral Fidelity |
| Journal | IEEE Access |
| DOI | 10.1109/ACCESS.2025.3648201 |

Funding information

This work was supported in part by the Japan Society for the Promotion of Science (JSPS) KAKENHI under Grants 25KJ2207 and 23K11174, and in part by the Japan Science and Technology Agency (JST) Support for Pioneering Research Initiated by the Next Generation (SPRING) under Grant JPMJSP2129.

EurekAlert!

https://www.eurekalert.org/news-releases/1113572

Profile

YOSHIDA Masatomo

Ph.D. Student, Graduate School of Science and Engineering (JSPS DC2)

Masatomo Yoshida received his B.E. and M.E. degrees from Doshisha University, Japan, in 2021 and 2023, respectively. He is currently pursuing a Ph.D. degree in Engineering at the Graduate School of Science and Engineering, Doshisha University. He is also a Research Fellow with the Japan Society for the Promotion of Science (JSPS) and has received a JST SPRING (Support for Pioneering Research Initiated by the Next Generation) Scholarship. His research interests include analyzing spatio-temporal time-series data, image processing, deep learning, and adversarial examples.

OKUDA Masahiro

Professor, Faculty of Science and Engineering, Department of Intelligent Information Systems

Masahiro Okuda (Senior Member, IEEE) received the B.E., M.E., and Dr.-Eng. degrees from Keio University, Yokohama, Japan, in 1993, 1995, and 1998, respectively. From 1996 to 2000, he was a Research Fellow with the Japan Society for the Promotion of Science. He was a Visiting Scholar at the University of California at Santa Barbara, Santa Barbara, CA, USA, in 1998, and at Carnegie Mellon University, Pittsburgh, PA, USA, in 1999. From 2000 to 2020, he was with the Faculty of Environmental Engineering, The University of Kitakyushu, Kitakyushu, Japan. He is currently a Professor with the Faculty of Science and Engineering, Doshisha University. His research interests include image restoration, high dynamic range imaging, multiple image fusion, and digital filter design. He received the SIP Distinguished Contribution Award in 2013, and the IE Award and the Contribution Award from IEICE in 2017.

Media contact

Organization for Research Initiatives & Development

Doshisha University

Kyotanabe, Kyoto 610-0394, JAPAN
